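Google Generative Language Semantic Retriever¶

In this notebook, we show how to get started quickly with Google's Generative Language Semantic Retriever. It offers specialized embedding models for high-quality retrieval and a tuned model for producing grounded output with customizable safety settings. We also show some advanced examples of combining LlamaIndex with this Google offering.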

Installation¶

%pip install llama-index-llms-gemini

%pip install llama-index-vector-stores-google

%pip install llama-index-indices-managed-google

%pip install llama-index-response-synthesizers-google

%pip install llama-index

%pip install "google-ai-generativelanguage>=0.4,<=1.0"

%pip install google-auth-oauthlib

from google.oauth2 import service_account
from llama_index.vector_stores.google import set_google_config

credentials = service_account.Credentials.from_service_account_file(
    "service_account_key.json",
    scopes=[
        "https://www.googleapis.com/auth/generative-language.retriever",
    ],
)
set_google_config(auth_credentials=credentials)
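
Before passing a key file to `from_service_account_file`, it can help to check that the file looks like a service account key at all. The following is a minimal sketch under that assumption: `check_service_account_key` and `REQUIRED_FIELDS` are hypothetical names introduced here, the check covers only a few well-known fields, and the demo file contains placeholder values rather than a real key.

```python
import json
import tempfile
from pathlib import Path
from typing import List

# A subset of the fields found in a service account key file.
REQUIRED_FIELDS = ("type", "client_email", "private_key", "token_uri")


def check_service_account_key(path: Path) -> List[str]:
    """Return a list of problems found in the key file (empty if none)."""
    data = json.loads(path.read_text())
    problems = [
        f"missing field: {name}" for name in REQUIRED_FIELDS if name not in data
    ]
    if data.get("type") != "service_account":
        problems.append("field 'type' is not 'service_account'")
    return problems


# Demonstrate with a throwaway file containing placeholder values only.
with tempfile.TemporaryDirectory() as tmp:
    key_path = Path(tmp) / "service_account_key.json"
    key_path.write_text(
        json.dumps({"type": "service_account", "client_email": "x"})
    )
    problems = check_service_account_key(key_path)

print(problems)
```

An empty list means the basic shape is plausible; it does not prove that the key is valid or that the account has access to the retriever scope.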

Download data¶

!mkdir -p 'data/paul_graham/'

!wget 'https://raw.githubusercontent.com/run-llama/llama_index/main/docs/docs/examples/data/paul_graham/paul_graham_essay.txt' -O 'data/paul_graham/paul_graham_essay.txt'

Setup¶

First, let's create some helper functions behind the scenes.

import llama_index.vector_stores.google.genai_extension as genaix

from typing import Iterable
from random import randrange

LLAMA_INDEX_COLAB_CORPUS_ID_PREFIX = "llama-index-colab"
SESSION_CORPUS_ID_PREFIX = (
    f"{LLAMA_INDEX_COLAB_CORPUS_ID_PREFIX}-{randrange(1000000)}"
)


def corpus_id(num_id: int) -> str:
    return f"{SESSION_CORPUS_ID_PREFIX}-{num_id}"


SESSION_CORPUS_ID = corpus_id(1)


def list_corpora() -> Iterable[genaix.Corpus]:
    client = genaix.build_semantic_retriever()
    yield from genaix.list_corpora(client=client)


def delete_corpus(*, corpus_id: str) -> None:
    client = genaix.build_semantic_retriever()
    genaix.delete_corpus(corpus_id=corpus_id, client=client)


def cleanup_colab_corpora():
    for corpus in list_corpora():
        if corpus.corpus_id.startswith(LLAMA_INDEX_COLAB_CORPUS_ID_PREFIX):
            try:
                delete_corpus(corpus_id=corpus.corpus_id)
                print(f"Deleted corpus {corpus.corpus_id}.")
            except Exception:
                # Best-effort cleanup: skip corpora that cannot be deleted.
                pass


# Remove any previously leftover corpora from this colab.
cleanup_colab_corpora()
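
The helpers above rely on a naming convention: every corpus created by this notebook shares a common prefix, and each session adds a random number so that concurrent sessions are unlikely to collide. The following is a minimal offline sketch of that convention. `make_session_prefix` and `select_for_cleanup` are hypothetical names used only for illustration, and no Google API is called.

```python
from random import Random
from typing import Iterable, List

COLAB_PREFIX = "llama-index-colab"


def make_session_prefix(rng: Random) -> str:
    """Build a per-session prefix: the shared prefix plus a random number."""
    return f"{COLAB_PREFIX}-{rng.randrange(1000000)}"


def select_for_cleanup(corpus_ids: Iterable[str]) -> List[str]:
    """Return only the IDs that belong to this notebook, from any session."""
    return [cid for cid in corpus_ids if cid.startswith(COLAB_PREFIX)]


session_prefix = make_session_prefix(Random(0))
existing_ids = [
    f"{session_prefix}-1",
    "llama-index-colab-42-1",  # left over from an earlier session
    "my-production-corpus",  # unrelated corpus; must not be deleted
]
to_delete = select_for_cleanup(existing_ids)
print(to_delete)
```

Filtering on the shared prefix rather than the session prefix is what lets the cleanup step also remove corpora left behind by earlier runs.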

Basic usage¶

A corpus is a collection of documents. A document is a body of text that is broken into chunks.

from llama_index.core import SimpleDirectoryReader
from llama_index.indices.managed.google import GoogleIndex
from llama_index.core import Response
import time

# Create a corpus.
index = GoogleIndex.create_corpus(
    corpus_id=SESSION_CORPUS_ID, display_name="My first corpus!"
)
print(f"Newly created corpus ID is {index.corpus_id}.")

# Ingestion.
documents = SimpleDirectoryReader("./data/paul_graham/").load_data()
index.insert_documents(documents)
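
To make the corpus, document, and chunk hierarchy concrete, here is a small in-memory model of a corpus holding documents, each split into chunks. It is only an illustration: the `Corpus`, `Document`, and `Chunk` classes and the fixed-width splitter are hypothetical and say nothing about how the Google service actually stores or chunks text.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Chunk:
    text: str


@dataclass
class Document:
    display_name: str
    chunks: List[Chunk] = field(default_factory=list)


@dataclass
class Corpus:
    corpus_id: str
    documents: List[Document] = field(default_factory=list)


def split_into_chunks(text: str, size: int) -> List[Chunk]:
    """Naive fixed-width splitter, used here only to produce some chunks."""
    return [Chunk(text[i : i + size]) for i in range(0, len(text), size)]


essay = "It was the best of times. It was the worst of times."
corpus = Corpus(corpus_id="demo-corpus")
corpus.documents.append(
    Document(
        display_name="Tale of Two Cities",
        chunks=split_into_chunks(essay, 20),
    )
)

for doc in corpus.documents:
    print(doc.display_name, len(doc.chunks))
```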

Let's check what we have ingested.

for corpus in list_corpora():
    print(corpus)

Let's ask the index a question.

# Querying.
query_engine = index.as_query_engine()
response = query_engine.query("What did Paul Graham do growing up?")
assert isinstance(response, Response)

# Show response.
print(f"Response is {response.response}")

# Show cited passages that were used to construct the response.
for cited_text in [node.text for node in response.source_nodes]:
    print(f"Cited text: {cited_text}")

# Show answerability. 0 means not answerable from the passages.
# 1 means the model is certain the answer can be provided from the passages.
if response.metadata:
    print(
        f"Answerability: {response.metadata.get('answerable_probability', 0)}"
    )

Creating a corpus¶

There are several ways to create a corpus.

# The Google server will provide a corpus ID for you.
index = GoogleIndex.create_corpus(display_name="My first corpus!")
print(index.corpus_id)

# You can also provide your own corpus ID. However, this ID needs to be globally
# unique. You will get an exception if someone else has this ID already.
index = GoogleIndex.create_corpus(
    corpus_id="my-first-corpus", display_name="My first corpus!"
)

# If you do not provide any parameter, Google will provide ID and a default
# display name for you.
index = GoogleIndex.create_corpus()
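
Because a self-chosen corpus ID must be globally unique, one possible way to cope with a collision is to retry with a random suffix. The sketch below shows that pattern against a fake in-memory backend. `CorpusIdTaken`, `fake_create`, and `create_with_retry` are hypothetical names; the exception actually raised by the library on a collision is not specified here, so adapt the `except` clause to what you observe.

```python
from random import Random
from typing import Callable


class CorpusIdTaken(Exception):
    """Hypothetical error raised when an ID is already in use."""


def create_with_retry(
    create: Callable[[str], str],
    base_id: str,
    rng: Random,
    attempts: int = 5,
) -> str:
    """Try base_id first, then base_id plus a random suffix."""
    candidate = base_id
    for _ in range(attempts):
        try:
            return create(candidate)
        except CorpusIdTaken:
            candidate = f"{base_id}-{rng.randrange(1000000)}"
    raise RuntimeError(f"no free corpus ID found after {attempts} attempts")


# Fake backend in which "my-first-corpus" is already taken.
taken = {"my-first-corpus"}


def fake_create(corpus_id: str) -> str:
    if corpus_id in taken:
        raise CorpusIdTaken(corpus_id)
    taken.add(corpus_id)
    return corpus_id


new_id = create_with_retry(fake_create, "my-first-corpus", Random(0))
print(new_id)
```

Letting the server assign the ID, as in the first call above, avoids the problem entirely and is usually the simpler choice.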

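Reusing a corpus¶

A corpus you create persists on Google's servers under your Google account. You can use its ID to get a handle back, and then query it, add more documents to it, and so on.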

# Use a previously created corpus.

index = GoogleIndex.from_corpus(corpus_id=SESSION_CORPUS_ID)

# Query it again!

query_engine = index.as_query_engine()

response = query_engine.query("Which company did Paul Graham build?")

assert isinstance(response, Response)

# Show response.

print(f"Response is {response.response}")

Listing and deleting corpora¶

See the Python library google-generativeai for further documentation.

Loading documents¶

Many node parsers and text splitters in LlamaIndex automatically add a `source_node` to each node to associate it with a file, for example:

    relationships={
        NodeRelationship.SOURCE: RelatedNodeInfo(
            node_id="abc-123",
            metadata={"file_name": "Title for the document"},
        )
    },

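Both GoogleIndex and GoogleVectorStore recognize this source node and will automatically create documents under your corpus on the Google servers.

If you are writing your own chunker, you should supply this source node relationship too, as follows: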

from llama_index.core.schema import NodeRelationship, RelatedNodeInfo, TextNode

index = GoogleIndex.from_corpus(corpus_id=SESSION_CORPUS_ID)
index.insert_nodes(
    [
        TextNode(
            text="It was the best of times.",
            relationships={
                NodeRelationship.SOURCE: RelatedNodeInfo(
                    node_id="123",
                    metadata={"file_name": "Tale of Two Cities"},
                )
            },
        ),
        TextNode(
            text="It was the worst of times.",
            relationships={
                NodeRelationship.SOURCE: RelatedNodeInfo(
                    node_id="123",
                    metadata={"file_name": "Tale of Two Cities"},
                )
            },
        ),
        TextNode(
            text="Bugs Bunny: Wassup doc?",
            relationships={
                NodeRelationship.SOURCE: RelatedNodeInfo(
                    node_id="456",
                    metadata={"file_name": "Bugs Bunny Adventure"},
                )
            },
        ),
    ]
)

If your nodes do not have a source node, the Google server will put them in a default document under your corpus.
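
The grouping rule described above can be pictured as follows. This sketch uses plain tuples rather than LlamaIndex node objects, and the `DEFAULT_DOCUMENT` key and `group_by_source` helper are hypothetical stand-ins; the actual server-side behavior may differ in its details.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Tuple

# Hypothetical name for the fallback document.
DEFAULT_DOCUMENT = "default-document"

# Each node is (text, source node ID), with None when there is no source node.
Node = Tuple[str, Optional[str]]


def group_by_source(nodes: List[Node]) -> Dict[str, List[str]]:
    """Group node texts by source ID; nodes without one share a default bucket."""
    documents: Dict[str, List[str]] = defaultdict(list)
    for text, source_id in nodes:
        documents[source_id or DEFAULT_DOCUMENT].append(text)
    return dict(documents)


nodes = [
    ("It was the best of times.", "123"),
    ("It was the worst of times.", "123"),
    ("Bugs Bunny: Wassup doc?", "456"),
    ("A node with no source.", None),
]
grouped = group_by_source(nodes)
for doc_id, texts in grouped.items():
    print(doc_id, len(texts))
```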

Listing and deleting documents¶

See the Python library google-generativeai for further documentation.

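Querying the corpus¶

Google's query engine is backed by a specially tuned LLM that grounds its response in the retrieved passages. For each response, an answerability probability is returned to indicate how confident the LLM is that the question can be answered from the retrieved passages.

In addition, Google's query engine supports answer styles, such as ABSTRACTIVE (succinct but abstract), EXTRACTIVE (very brief and extractive), and VERBOSE (extra details).

The engine also supports safety settings.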

from google.ai.generativelanguage import (
    GenerateAnswerRequest,
    HarmCategory,
    SafetySetting,
)

index = GoogleIndex.from_corpus(corpus_id=SESSION_CORPUS_ID)
query_engine = index.as_query_engine(
    # We recommend temperature between 0 and 0.2.
    temperature=0.2,
    # See package `google-generativeai` for other answer styles.
    answer_style=GenerateAnswerRequest.AnswerStyle.ABSTRACTIVE,
    # See package `google-generativeai` for additional safety settings.
    safety_setting=[
        SafetySetting(
            category=HarmCategory.HARM_CATEGORY_SEXUALLY_EXPLICIT,
            threshold=SafetySetting.HarmBlockThreshold.BLOCK_LOW_AND_ABOVE,
        ),
        SafetySetting(
            category=HarmCategory.HARM_CATEGORY_VIOLENCE,
            threshold=SafetySetting.HarmBlockThreshold.BLOCK_ONLY_HIGH,
        ),
    ],
)

response = query_engine.query("What was Bugs Bunny's favorite saying?")
print(response)

See the Python library google-generativeai for further documentation.

Interpreting the response¶

from llama_index.core import Response

response = query_engine.query("What were Paul Graham's achievements?")
assert isinstance(response, Response)

# Show response.
print(f"Response is {response.response}")

# Show cited passages that were used to construct the response.
for cited_text in [node.text for node in response.source_nodes]:
    print(f"Cited text: {cited_text}")

# Show answerability. 0 means not answerable from the passages.
# 1 means the model is certain the answer can be provided from the passages.
if response.metadata:
    print(
        f"Answerability: {response.metadata.get('answerable_probability', 0)}"
    )
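
One way to act on the answerability score is to fall back to a fixed message when the model is not confident that the passages contain an answer. The sketch below works on a plain metadata dictionary with the same `answerable_probability` key as above. The `0.5` cut-off and the `answer_or_fallback` helper are arbitrary choices made for illustration, not recommended values.

```python
from typing import Mapping, Optional

FALLBACK = "The retrieved passages do not appear to answer this question."


def answer_or_fallback(
    answer: str,
    metadata: Optional[Mapping[str, float]],
    threshold: float = 0.5,  # arbitrary cut-off chosen for this example
) -> str:
    """Return the answer only if its answerability meets the threshold."""
    probability = (metadata or {}).get("answerable_probability", 0.0)
    if probability >= threshold:
        return answer
    return FALLBACK


print(answer_or_fallback("He wrote essays.", {"answerable_probability": 0.9}))
print(answer_or_fallback("Unsupported guess.", {"answerable_probability": 0.1}))
print(answer_or_fallback("No metadata at all.", None))
```

Treating missing metadata as a probability of 0 mirrors the `.get(..., 0)` default used when printing the score.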

Advanced RAG¶

GoogleIndex is built on GoogleVectorStore and GoogleTextSynthesizer. These components can be combined with other building blocks in LlamaIndex to produce advanced RAG applications.

Below we show a few examples.

from llama_index.llms.gemini import Gemini

GEMINI_API_KEY = "" # @param {type:"string"}

gemini = Gemini(api_key=GEMINI_API_KEY)

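Reranker + Google Retriever¶

Converting content into vectors is a lossy process. LLM-based reranking compensates for this by reordering the retrieved content with an LLM, which has higher fidelity because it has access to both the actual query and the original passage.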

from llama_index.response_synthesizers.google import GoogleTextSynthesizer
from llama_index.vector_stores.google import GoogleVectorStore
from llama_index.core import VectorStoreIndex
from llama_index.core.postprocessor import LLMRerank
from llama_index.core.query_engine import RetrieverQueryEngine
from llama_index.core.retrievers import VectorIndexRetriever

# Set up the query engine with a reranker.
store = GoogleVectorStore.from_corpus(corpus_id=SESSION_CORPUS_ID)
index = VectorStoreIndex.from_vector_store(
    vector_store=store,
)
response_synthesizer = GoogleTextSynthesizer.from_defaults(
    temperature=0.2,
    answer_style=GenerateAnswerRequest.AnswerStyle.ABSTRACTIVE,
)
reranker = LLMRerank(
    top_n=10,
    llm=gemini,
)
query_engine = RetrieverQueryEngine.from_args(
    retriever=VectorIndexRetriever(
        index=index,
        similarity_top_k=20,
    ),
    node_postprocessors=[reranker],
    response_synthesizer=response_synthesizer,
)

# Query.
response = query_engine.query("What were Paul Graham's achievements?")
print(response)
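
The retrieve-then-rerank pattern used above can be sketched without any external service: a cheap first stage fetches a generous candidate set (the role of `similarity_top_k`), and a more careful second stage keeps only the best few (the role of `top_n`). In this toy version both scoring functions are simple word-overlap heuristics standing in for the embedding search and the LLM. `cheap_score`, `careful_score`, and `retrieve_then_rerank` are hypothetical names.

```python
from typing import Callable, List


def words(text: str) -> List[str]:
    return text.lower().replace("?", "").replace(".", "").split()


def cheap_score(query: str, passage: str) -> float:
    """First stage: count shared words (stand-in for vector similarity)."""
    return float(len(set(words(query)) & set(words(passage))))


def careful_score(query: str, passage: str) -> float:
    """Second stage: shared words per passage word (stand-in for an LLM)."""
    return cheap_score(query, passage) / max(len(words(passage)), 1)


def retrieve_then_rerank(
    query: str,
    passages: List[str],
    similarity_top_k: int,
    top_n: int,
    first_stage: Callable[[str, str], float] = cheap_score,
    second_stage: Callable[[str, str], float] = careful_score,
) -> List[str]:
    # Stage 1: keep the similarity_top_k best candidates by the cheap score.
    candidates = sorted(
        passages, key=lambda p: first_stage(query, p), reverse=True
    )[:similarity_top_k]
    # Stage 2: reorder the candidates by the careful score and keep top_n.
    reranked = sorted(
        candidates, key=lambda p: second_stage(query, p), reverse=True
    )
    return reranked[:top_n]


passages = [
    "Paul Graham wrote essays.",
    "Paul Graham co-founded a company and later wrote many long essays about it.",
    "The weather was mild that year.",
]
top = retrieve_then_rerank(
    "What did Paul Graham write?", passages, similarity_top_k=2, top_n=1
)
print(top)
```

The first stage cannot tell the two Paul Graham passages apart, while the second stage prefers the more focused one; that difference in fidelity is the reason for adding a reranker.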

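Multi-Query + Google Retriever¶

Sometimes a user's query is too complex. You may get better retrieval results if you break the original query down into smaller, more focused queries.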

from llama_index.core.indices.query.query_transform.base import (
    StepDecomposeQueryTransform,
)
from llama_index.core.query_engine import MultiStepQueryEngine

# Set up the query engine with multi-turn query-rewriter.
store = GoogleVectorStore.from_corpus(corpus_id=SESSION_CORPUS_ID)
index = VectorStoreIndex.from_vector_store(
    vector_store=store,
)
response_synthesizer = GoogleTextSynthesizer.from_defaults(
    temperature=0.2,
    answer_style=GenerateAnswerRequest.AnswerStyle.ABSTRACTIVE,
)
single_step_query_engine = index.as_query_engine(
    similarity_top_k=10,
    response_synthesizer=response_synthesizer,
)
step_decompose_transform = StepDecomposeQueryTransform(
    llm=gemini,
    verbose=True,
)
query_engine = MultiStepQueryEngine(
    query_engine=single_step_query_engine,
    query_transform=step_decompose_transform,
    response_synthesizer=response_synthesizer,
    index_summary="Ask me anything.",
    num_steps=6,
)

# Query.
response = query_engine.query("What were Paul Graham's achievements?")
print(response)
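
Conceptually, a multi-step engine asks one focused sub-question at a time, takes what it has learned so far into account for the next step, and stops after at most `num_steps` rounds or when nothing is left to ask. The loop below is a simplified sketch of that idea with hard-coded stand-ins for the LLM and the index. `next_subquestion`, `answer_subquestion`, and `multi_step_query` are hypothetical and do not mirror the internals of `MultiStepQueryEngine`.

```python
from typing import Dict, List, Optional, Tuple

# Toy "index": each focused sub-question has a known answer.
KNOWLEDGE: Dict[str, str] = {
    "What did Paul Graham write?": "Essays and software.",
    "Which company did Paul Graham build?": "Viaweb.",
}


def next_subquestion(history: List[Tuple[str, str]]) -> Optional[str]:
    """Stand-in for the LLM: pick the first sub-question not yet asked."""
    asked = {question for question, _ in history}
    for question in KNOWLEDGE:
        if question not in asked:
            return question
    return None  # nothing left to ask


def answer_subquestion(question: str) -> str:
    """Stand-in for the single-step query engine."""
    return KNOWLEDGE[question]


def multi_step_query(num_steps: int) -> List[Tuple[str, str]]:
    history: List[Tuple[str, str]] = []
    for _ in range(num_steps):
        question = next_subquestion(history)
        if question is None:
            break  # stop early once the decomposition is exhausted
        history.append((question, answer_subquestion(question)))
    return history


steps = multi_step_query(num_steps=6)
for question, answer in steps:
    print(f"{question} -> {answer}")
```

In this sketch `num_steps` is an upper bound rather than a fixed count, so a generous value costs nothing when the question is simple.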

HyDE + Google Retriever¶

When you can write a prompt that produces **hypothetical answers** sharing many traits with the real answer, you can try HyDE!

from llama_index.core.indices.query.query_transform import HyDEQueryTransform
from llama_index.core.query_engine import TransformQueryEngine

# Set up the query engine with a HyDE query transform.
store = GoogleVectorStore.from_corpus(corpus_id=SESSION_CORPUS_ID)
index = VectorStoreIndex.from_vector_store(
    vector_store=store,
)
response_synthesizer = GoogleTextSynthesizer.from_defaults(
    temperature=0.2,
    answer_style=GenerateAnswerRequest.AnswerStyle.ABSTRACTIVE,
)
base_query_engine = index.as_query_engine(
    similarity_top_k=10,
    response_synthesizer=response_synthesizer,
)
hyde = HyDEQueryTransform(
    llm=gemini,
    include_original=False,
)
hyde_query_engine = TransformQueryEngine(base_query_engine, hyde)

# Query. Use the HyDE engine built above, not the earlier multi-step engine.
response = hyde_query_engine.query("What were Paul Graham's achievements?")
print(response)
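
The idea behind HyDE can be shown with a toy retriever: instead of matching the question against the passages, we match a generated hypothetical answer, which tends to share more vocabulary with the real passage. Everything below is a stand-in: `fake_llm_answer` returns a canned string where a real setup would call an LLM, and word overlap replaces embedding similarity.

```python
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    return set(text.lower().replace("?", "").replace(".", "").split())


def overlap(a: str, b: str) -> int:
    """Word overlap, standing in for embedding similarity."""
    return len(tokenize(a) & tokenize(b))


def fake_llm_answer(question: str) -> str:
    """Canned hypothetical answer; a real system would generate it with an LLM."""
    return "He wrote essays and built a startup that was later acquired."


def retrieve(search_text: str, passages: List[str]) -> str:
    """Return the passage that overlaps most with the search text."""
    return max(passages, key=lambda p: overlap(search_text, p))


passages = [
    "What people achieve is often a matter of luck.",
    "He built a startup and wrote essays about programming.",
]
question = "What were his achievements?"

# Plain retrieval searches with the question itself.
plain_hit = retrieve(question, passages)
# HyDE without the original query searches with the hypothetical answer only.
hyde_hit = retrieve(fake_llm_answer(question), passages)
print("plain:", plain_hit)
print("hyde: ", hyde_hit)
```

Here the question shares only a stray word with the wrong passage, while the hypothetical answer lines up with the passage that actually describes the achievements.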

Multi-Query + Reranker + HyDE + Google Retriever¶

Or combine them all!

# Google's retriever and AQA model setup.
store = GoogleVectorStore.from_corpus(corpus_id=SESSION_CORPUS_ID)
index = VectorStoreIndex.from_vector_store(
    vector_store=store,
)
response_synthesizer = GoogleTextSynthesizer.from_defaults(
    temperature=0.2, answer_style=GenerateAnswerRequest.AnswerStyle.ABSTRACTIVE
)

# Reranker setup.
reranker = LLMRerank(
    top_n=10,
    llm=gemini,
)
single_step_query_engine = index.as_query_engine(
    similarity_top_k=20,
    node_postprocessors=[reranker],
    response_synthesizer=response_synthesizer,
)

# HyDE setup.
hyde = HyDEQueryTransform(
    llm=gemini,
    include_original=False,
)
hyde_query_engine = TransformQueryEngine(single_step_query_engine, hyde)

# Multi-query setup.
step_decompose_transform = StepDecomposeQueryTransform(
    llm=gemini, verbose=True
)
query_engine = MultiStepQueryEngine(
    query_engine=hyde_query_engine,
    query_transform=step_decompose_transform,
    response_synthesizer=response_synthesizer,
    index_summary="Ask me anything.",
    num_steps=6,
)

# Query.
response = query_engine.query("What were Paul Graham's achievements?")
print(response)

Clean up corpora created in the Colab¶

cleanup_colab_corpora()

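Appendix: Set up OAuth with user credentials¶

Please follow the OAuth Quickstart to set up OAuth using user credentials. Below is an overview of the required steps, taken from that documentation.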

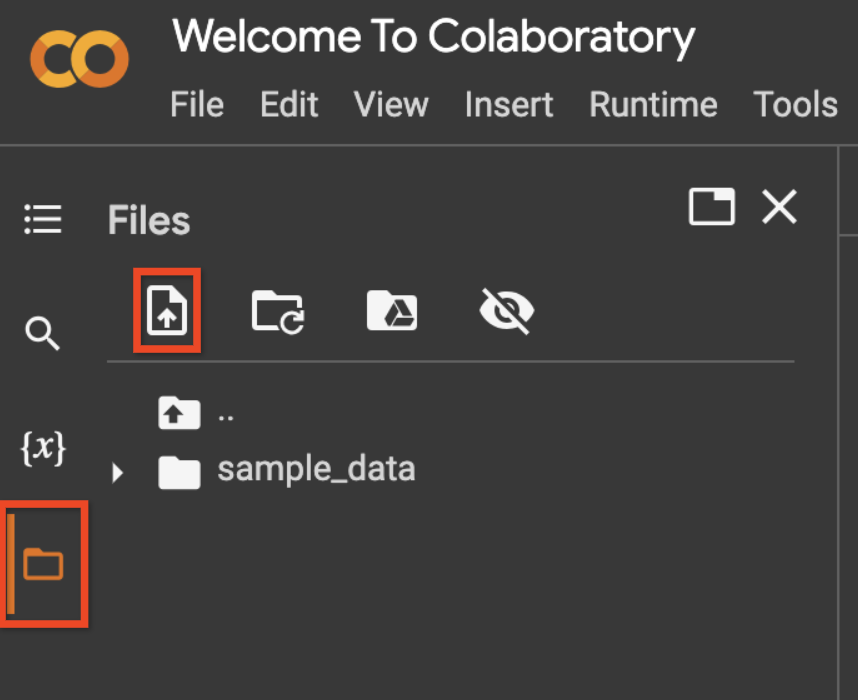

If you want to run this notebook in Colab, start by uploading your `client_secret*.json` file using the "File > Upload" option.

Rename the uploaded file to `client_secret.json`, or change the variable `client_file_name` in the code below.

# Replace TODO-your-project-name with the project used in the OAuth Quickstart

project_name = "TODO-your-project-name" # @param {type:"string"}

# Replace TODO-your-email@example.com with the email added as a test user in the OAuth Quickstart

email = "TODO-your-email@example.com" # @param {type:"string"}

# Replace client_secret.json with the client_secret_* file name you uploaded.

client_file_name = "client_secret.json"

# IMPORTANT: Follow the instructions from the output - you must copy the command

# to your terminal and copy the output after authentication back here.

!gcloud config set project $project_name

!gcloud config set account $email

# NOTE: The simplified project setup in this tutorial triggers a "Google hasn't verified this app." dialog.

# This is normal, click "Advanced" -> "Go to [app name] (unsafe)"

!gcloud auth application-default login --no-browser --client-id-file=$client_file_name --scopes="https://www.googleapis.com/auth/generative-language.retriever,https://www.googleapis.com/auth/cloud-platform"

This gives you a URL, which you should open in your local browser. Follow the instructions there to complete authentication and authorization.