Videodb 检索器

RAG:视频上的多模态搜索和视频结果流式播放 📺¶

构建用于文本的 RAG 管道相对简单,这得益于为解析、索引和检索文本数据而开发的工具。

然而,将 RAG 模型应用于视频内容带来了更大的挑战。视频结合了视觉、听觉和文本元素,需要更强的处理能力和更复杂的视频管道。

虽然大型语言模型 (LLM) 在处理文本方面表现出色,但在帮助您消费或创建视频片段方面却力有不逮。VideoDB 为您的 MP4 文件提供了复杂的数据库抽象,使得可以在视频数据上使用 LLM。借助 VideoDB,您不仅可以分析搜索结果,还可以即时观看其视频流。

VideoDB 是一个无服务器数据库,旨在简化视频内容的存储、搜索、编辑和流式播放。VideoDB 通过构建索引以及开发用于查询和浏览视频内容的接口,提供对顺序视频数据的随机访问。访问 docs.videodb.io 了解更多信息。

在本笔记本中,我们介绍了 VideoDBRetriever,这是一个专门设计的工具,旨在简化视频内容 RAG 管道的创建,无需处理复杂的视频基础设施。

🔑 要求¶

要连接到 VideoDB,只需获取 API 密钥并创建连接。可以通过设置环境变量 VIDEO_DB_API_KEY 来完成。您可以从 👉🏼 VideoDB 控制台 获取。 (前 50 次上传免费,无需信用卡!)

从 OpenAI 平台获取 OPENAI_API_KEY,用于 llama_index 响应合成器。

import os

os.environ["VIDEO_DB_API_KEY"] = ""

os.environ["OPENAI_API_KEY"] = ""

%pip install videodb

%pip install llama-index

%pip install llama-index-retrievers-videodb

🛠 使用 VideoDBRetriever 为单个视频构建 RAG¶

from videodb import connect

# connect to VideoDB

conn = connect()

coll = conn.create_collection(

name="VideoDB Retrievers", description="VideoDB Retrievers"

)

# upload videos to default collection in VideoDB

print("Uploading Video")

video = coll.upload(url="https://www.youtube.com/watch?v=aRgP3n0XiMc")

print(f"Video uploaded with ID: {video.id}")

# video = coll.get_video("m-b6230808-307d-468a-af84-863b2c321f05")

Uploading Video Video uploaded with ID: m-a758f9bb-f769-484a-9d54-02417ccfe7e6

coll = conn.get_collection():返回默认集合对象。coll.get_videos():返回集合中所有视频的列表。coll.get_video(video_id):根据给定的video_id返回视频对象。

🗣️ 第二步:从语音内容中索引与搜索¶

视频可以视为具有不同模态的数据。首先,我们将处理语音内容。

🗣️ 索引语音内容¶

print("Indexing spoken content in Video...")

video.index_spoken_words()

Indexing spoken content in Video...

100%|████████████████████████████████████████████████████████████████████████████████████████████████████| 100/100 [00:48<00:00, 2.08it/s]

🗣️ 从语音索引中检索相关节点¶

我们将使用 VideoDBRetriever 从我们的索引内容中检索相关节点。视频 ID 应作为参数传递,并且 index_type 应设置为 IndexType.spoken_word。

您可以在实验后配置 score_threshold 和 result_threshold。

from llama_index.retrievers.videodb import VideoDBRetriever

from videodb import SearchType, IndexType

spoken_retriever = VideoDBRetriever(

collection=coll.id,

video=video.id,

search_type=SearchType.semantic,

index_type=IndexType.spoken_word,

score_threshold=0.1,

)

spoken_query = "Nationwide exams"

nodes_spoken_index = spoken_retriever.retrieve(spoken_query)

🗣️️️ 查看结果: 💬 文本¶

我们将使用相关节点并通过 llamaindex 合成响应

from llama_index.core import get_response_synthesizer

response_synthesizer = get_response_synthesizer()

response = response_synthesizer.synthesize(

spoken_query, nodes=nodes_spoken_index

)

print(response)

The results of the nationwide exams were eagerly awaited all day.

🗣️ 查看结果: 🎥 视频片段¶

对于每个与查询相关的检索到的节点,元数据中的 start 和 end 字段表示该节点覆盖的时间间隔。

我们将使用 VideoDB 的可编程流根据这些节点的时间戳生成相关的视频片段流。

from videodb import play_stream

results = [

(node.metadata["start"], node.metadata["end"])

for node in nodes_spoken_index

]

stream_link = video.generate_stream(results)

play_stream(stream_link)

'https://console.videodb.io/player?url=https://dseetlpshk2tb.cloudfront.net/v3/published/manifests/3c108acd-e459-494a-bc17-b4768c78e5df.m3u8'

📸️ 第三步:从视觉内容中索引与搜索¶

from videodb import SceneExtractionType

print("Indexing Visual content in Video...")

# Index scene content

index_id = video.index_scenes(

extraction_type=SceneExtractionType.shot_based,

extraction_config={"frame_count": 3},

prompt="Describe the scene in detail",

)

video.get_scene_index(index_id)

print(f"Scene Index successful with ID: {index_id}")

Indexing Visual content in Video... Scene Index successful with ID: 990733050d6fd4f5

📸️ 从场景索引中检索相关节点¶

就像我们为语音索引使用 VideoDBRetriever 一样,我们将为场景索引使用它。在这里,我们需要将 index_type 设置为 IndexType.scene 并传递 scene_index_id。

from llama_index.retrievers.videodb import VideoDBRetriever

from videodb import SearchType, IndexType

scene_retriever = VideoDBRetriever(

collection=coll.id,

video=video.id,

search_type=SearchType.semantic,

index_type=IndexType.scene,

scene_index_id=index_id,

score_threshold=0.1,

)

scene_query = "accident scenes"

nodes_scene_index = scene_retriever.retrieve(scene_query)

📸️️️ 查看结果: 💬 文本¶

from llama_index.core import get_response_synthesizer

response_synthesizer = get_response_synthesizer()

response = response_synthesizer.synthesize(

scene_query, nodes=nodes_scene_index

)

print(response)

The scenes described do not depict accidents but rather dynamic and intense scenarios involving motion, urgency, and tension in urban settings at night.

📸 ️ 查看结果: 🎥 视频片段¶

from videodb import play_stream

results = [

(node.metadata["start"], node.metadata["end"])

for node in nodes_scene_index

]

stream_link = video.generate_stream(results)

play_stream(stream_link)

'https://console.videodb.io/player?url=https://dseetlpshk2tb.cloudfront.net/v3/published/manifests/ae74e9da-13bf-4056-8cfa-0267087b74d7.m3u8'

🛠️ 第四步:简单的多模态 RAG - 结合两种模态的结果¶

我们希望在我们的视频库中实现这样的多模态查询

📸🗣️ "显示 1.事故场景 2.关于全国性考试的讨论"

有很多方法可以创建多模态 RAG,为了简单起见,我们选择一种简单的方法

- 🧩 查询转换:将查询分成两个部分,分别用于场景索引和语音索引。

- 🔎 为每种模态查找相关节点:使用

VideoDBRetriever从语音索引和场景索引中查找相关节点。 - ✏️ 查看结果:文本:使用相关节点合成文本响应,整合来自两个索引的结果以精确识别视频片段。

- 🎥 查看结果:视频片段:整合来自两个索引的结果以精确识别视频片段。

要查看更高级的多模态技术,请查阅我们的高级多模态指南。

🧩 查询转换¶

from llama_index.llms.openai import OpenAI

def split_spoken_visual_query(query):

transformation_prompt = """

Divide the following query into two distinct parts: one for spoken content and one for visual content. The spoken content should refer to any narration, dialogue, or verbal explanations and The visual content should refer to any images, videos, or graphical representations. Format the response strictly as:\nSpoken: <spoken_query>\nVisual: <visual_query>\n\nQuery: {query}

"""

prompt = transformation_prompt.format(query=query)

response = OpenAI(model="gpt-4").complete(prompt)

divided_query = response.text.strip().split("\n")

spoken_query = divided_query[0].replace("Spoken:", "").strip()

scene_query = divided_query[1].replace("Visual:", "").strip()

return spoken_query, scene_query

query = "Show me 1.Accident Scene 2.Discussion about nationwide exams "

spoken_query, scene_query = split_spoken_visual_query(query)

print("Query for Spoken retriever : ", spoken_query)

print("Query for Scene retriever : ", scene_query)

Query for Spoken retriever : Discussion about nationwide exams Query for Scene retriever : Accident Scene

🔎 为每种模态查找相关节点¶

from videodb import SearchType, IndexType

# Retriever for Spoken Index

spoken_retriever = VideoDBRetriever(

collection=coll.id,

video=video.id,

search_type=SearchType.semantic,

index_type=IndexType.spoken_word,

score_threshold=0.1,

)

# Retriever for Scene Index

scene_retriever = VideoDBRetriever(

collection=coll.id,

video=video.id,

search_type=SearchType.semantic,

index_type=IndexType.scene,

scene_index_id=index_id,

score_threshold=0.1,

)

# Fetch relevant nodes for Spoken index

nodes_spoken_index = spoken_retriever.retrieve(spoken_query)

# Fetch relevant nodes for Scene index

nodes_scene_index = scene_retriever.retrieve(scene_query)

️💬️ 查看结果:文本¶

response_synthesizer = get_response_synthesizer()

response = response_synthesizer.synthesize(

query, nodes=nodes_scene_index + nodes_spoken_index

)

print(response)

The first scene depicts a dynamic and intense scenario in an urban setting at night, involving a motorcycle chase with a figure possibly dodging away. The second scene portrays a dramatic escape or rescue situation with characters in motion alongside a large truck. The discussion about nationwide exams involves a conversation between a character and their mother about exam results and studying.

🎥 查看结果:视频片段¶

从每种模态中,我们都检索到了在该特定模态(在本例中为语义和场景/视觉)内与查询相关的结果。

每个节点在元数据中都有 start 和 end 字段,表示该节点覆盖的时间间隔。

有很多方法可以合成结果,目前我们将使用一种简单的方法

Union(并集):此方法获取所有节点的所有时间戳,创建一个全面的列表,包含所有相关时间,即使某些时间戳仅出现在一种模态中。

另一种方法可以是 Intersection(交集)

Intersection(交集):此方法仅包含存在于每个节点中的时间戳,从而生成一个较小的列表,其中包含在所有模态中普遍相关的时间。

from videodb import play_stream

def merge_intervals(intervals):

if not intervals:

return []

intervals.sort(key=lambda x: x[0])

merged = [intervals[0]]

for interval in intervals[1:]:

if interval[0] <= merged[-1][1]:

merged[-1][1] = max(merged[-1][1], interval[1])

else:

merged.append(interval)

return merged

# Extract timestamps from both relevant nodes

results = [

[node.metadata["start"], node.metadata["end"]]

for node in nodes_spoken_index + nodes_scene_index

]

merged_results = merge_intervals(results)

# Use Videodb to create a stream of relevant clips

stream_link = video.generate_stream(merged_results)

play_stream(stream_link)

'https://console.videodb.io/player?url=https://dseetlpshk2tb.cloudfront.net/v3/published/manifests/91b08b39-c72f-4e33-ad1c-47a2ea11ac17.m3u8'

🛠 使用 VideoDBRetriever 为视频集合构建 RAG¶

向我们的集合添加更多视频¶

video_2 = coll.upload(url="https://www.youtube.com/watch?v=kMRX3EA68g4")

🗣️ 索引语音内容¶

video_2.index_spoken_words()

📸 索引场景¶

from videodb import SceneExtractionType

print("Indexing Visual content in Video...")

# Index scene content

index_id = video_2.index_scenes(

extraction_type=SceneExtractionType.shot_based,

extraction_config={"frame_count": 3},

prompt="Describe the scene in detail",

)

video_2.get_scene_index(index_id)

print(f"Scene Index successful with ID: {index_id}")

[Video(id=m-b6230808-307d-468a-af84-863b2c321f05, collection_id=c-4882e4a8-9812-4921-80ff-b77c9c4ab4e7, stream_url=https://dseetlpshk2tb.cloudfront.net/v3/published/manifests/528623c2-3a8e-4c84-8f05-4dd74f1a9977.m3u8, player_url=https://console.dev.videodb.io/player?url=https://dseetlpshk2tb.cloudfront.net/v3/published/manifests/528623c2-3a8e-4c84-8f05-4dd74f1a9977.m3u8, name=Death note - episode 1 (english dubbed) | HD, description=None, thumbnail_url=None, length=1366.006712), Video(id=m-f5b86106-4c28-43f1-b753-fa9b3f839dfe, collection_id=c-4882e4a8-9812-4921-80ff-b77c9c4ab4e7, stream_url=https://dseetlpshk2tb.cloudfront.net/v3/published/manifests/4273851a-46f3-4d57-bc1b-9012ce330da8.m3u8, player_url=https://console.dev.videodb.io/player?url=https://dseetlpshk2tb.cloudfront.net/v3/published/manifests/4273851a-46f3-4d57-bc1b-9012ce330da8.m3u8, name=Death note - episode 5 (english dubbed) | HD, description=None, thumbnail_url=None, length=1366.099592)]

🧩 查询转换¶

query = "Show me 1.Accident Scene 2.Kiara is speaking "

spoken_query, scene_query = split_spoken_visual_query(query)

print("Query for Spoken retriever : ", spoken_query)

print("Query for Scene retriever : ", scene_query)

Query for Spoken retriever : Kiara is speaking Query for Scene retriever : Show me Accident Scene

🔎 查找相关节点¶

from videodb import SearchType, IndexType

# Retriever for Spoken Index

spoken_retriever = VideoDBRetriever(

collection=coll.id,

search_type=SearchType.semantic,

index_type=IndexType.spoken_word,

score_threshold=0.2,

)

# Retriever for Scene Index

scene_retriever = VideoDBRetriever(

collection=coll.id,

search_type=SearchType.semantic,

index_type=IndexType.scene,

score_threshold=0.2,

)

# Fetch relevant nodes for Spoken index

nodes_spoken_index = spoken_retriever.retrieve(spoken_query)

# Fetch relevant nodes for Scene index

nodes_scene_index = scene_retriever.retrieve(scene_query)

️💬️ 查看结果:文本¶

response_synthesizer = get_response_synthesizer()

response = response_synthesizer.synthesize(

"What is kaira speaking. And tell me about accident scene",

nodes=nodes_scene_index + nodes_spoken_index,

)

print(response)

Kira is speaking about his plans and intentions regarding the agent from the bus. The accident scene captures a distressing moment where an individual is urgently making a phone call near a damaged car, with a victim lying motionless on the ground. The chaotic scene includes a bus in the background, emphasizing the severity of the tragic incident.

🎥 查看结果:视频片段¶

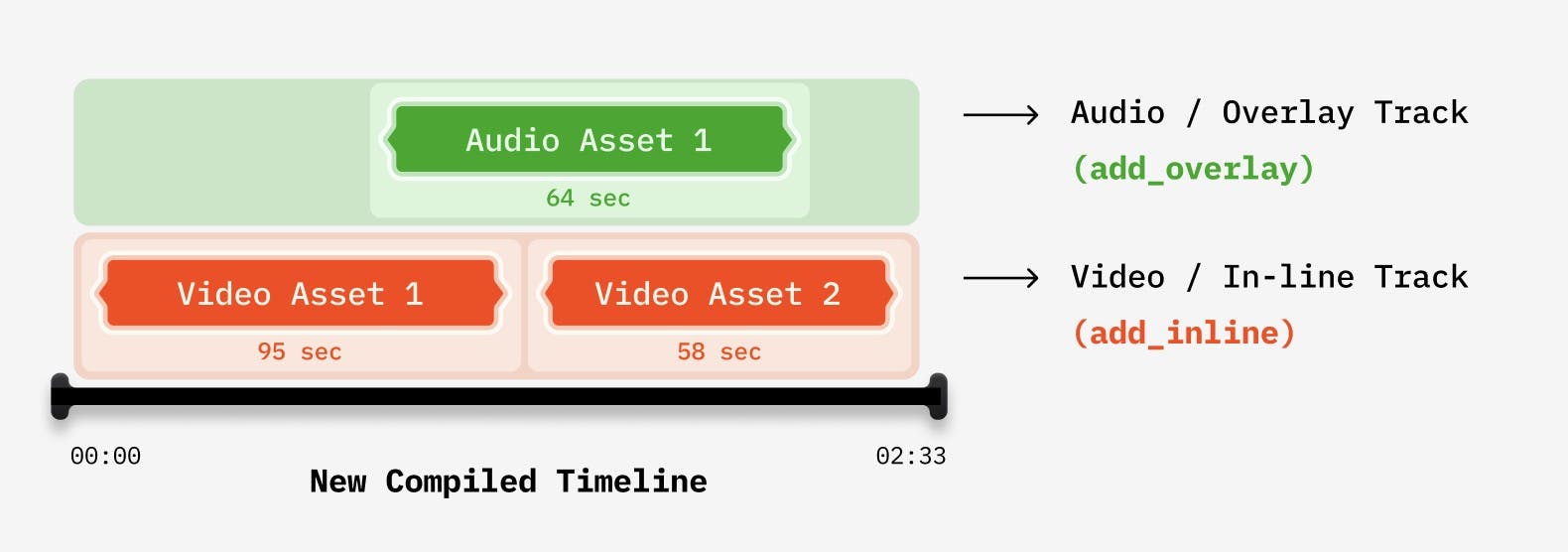

当处理涉及多个视频的编辑工作流时,我们需要创建一个 VideoAsset 的 Timeline,然后编译它们。

from videodb import connect, play_stream

from videodb.timeline import Timeline

from videodb.asset import VideoAsset

# Create a new timeline Object

timeline = Timeline(conn)

for node_obj in nodes_scene_index + nodes_spoken_index:

node = node_obj.node

# Create a Video asset for each node

node_asset = VideoAsset(

asset_id=node.metadata["video_id"],

start=node.metadata["start"],

end=node.metadata["end"],

)

# Add the asset to timeline

timeline.add_inline(node_asset)

# Generate stream for the compiled timeline

stream_url = timeline.generate_stream()

play_stream(stream_url)

'https://console.videodb.io/player?url=https://dseetlpshk2tb.cloudfront.net/v3/published/manifests/2810827b-4d80-44af-a26b-ded2a7a586f6.m3u8'

配置 VideoDBRetriever¶

⚙️ 单个视频的检索器¶

您可以传递视频对象的 id 来仅在该视频中搜索。

VideoDBRetriever(video="my_video.id")

⚙️ 视频集/集合的检索器¶

您可以传递集合的 id 来仅在该集合中搜索。

VideoDBRetriever(collection="my_coll.id")

⚙️ 不同类型索引的检索器¶

from videodb import IndexType

spoken_word = VideoDBRetriever(index_type=IndexType.spoken_word)

scene_retriever = VideoDBRetriever(index_type=IndexType.scene, scene_index_id="my_index_id")

⚙️ 配置检索器的搜索类型¶

search_type 决定了用于针对给定查询检索节点的搜索方法

from videodb import SearchType, IndexType

keyword_spoken_search = VideoDBRetriever(

search_type=SearchType.keyword,

index_type=IndexType.spoken_word

)

semantic_scene_search = VideoDBRetriever(

search_type=SearchType.semantic,

index_type=IndexType.spoken_word

)

⚙️ 配置阈值参数¶

result_threshold:是检索器返回结果数量的阈值;默认值为5score_threshold:只有分数高于score_threshold的节点才会被检索器返回;默认值为0.2

custom_retriever = VideoDBRetriever(result_threshold=2, score_threshold=0.5)